31 May 2022

Why Can't Developers and Testers Just Get Along?

The relationship between software testers and software developers is fraught with tension. More often than not, it's defined by condescension and grudging dependence on both sides.

On the one hand, testers sometimes suffer from the widely held idea that testing software is a less difficult or demanding task than developing it. This is exacerbated by the many companies that treat the "rise" from QA engineer to "real" software engineer as a big step on their internal career ladder.

Testers also frequently have to petition development for "extra" features such as backwards compatibility and accessibility descriptors, which are at times perceived by developers as superfluous and tedious to implement.

Developers, on the other hand, often only hear from QA when there is a perceived problem with their work. Nobody likes to hear they made a mistake, and not everyone handles criticism gracefully.

But developers actually depend on the diligence and knowledge of the QA department. I have personally witnessed how developers can build "technically correct" software without actually understanding the underlying use case. This results in idiosyncrasies in the software that are hard to spot without intimate knowledge of the business domain.

The question is: What can software businesses do to ease this tension?

Can we make building software so easy that everyone can do it?

One idea might be to try and make traditional "software development" skills unnecessary for building new applications.

This was the aim of the "Low Code" movement. The idea, sometimes also called "visual programming", was to build "meta programs": toolkits for building software for a specific domain out of visual blocks, templates, and maybe simple scripts.

It was motivated by the ongoing shortage of software engineers, which leaves companies unable to advance the software they use or sell quickly enough to keep up with demand and competition.

Instead of building each piece of software in code from scratch, software testers (or even managers and end-users) would be able to use a "no code" or "low code" tool to piece together new software for their needs from existing building blocks, reducing the job of software engineers to the programming of the initial tool.

The resulting software would still need to be thoroughly tested, of course, to make sure it meets the initial specifications. But the skill set of the software tester, with their intimate knowledge of what the software should do, is well suited to both building and testing low-code software.

But the dream of making building software as easy as editing a spreadsheet has largely failed.

Most software requirements are, in the details, too complex and specific to fit into any modular system. In the best case, it's possible to build software that kind of meets the original specs, but is filled with compromises, dumbed down and clunky to use. Software from a modular system will always require its users to adapt to it, instead of serving its users.

Some of these "low code" ideas have stuck around in modern website building services and some process automation tools, and found success in these limited scopes.

But they are far from the magic bullet that would let testers get by without relying on developers.

Can we make testing software so convenient that every developer can do it?

So, what about resolving our tester-developer conundrum in the other direction? Can we make testing software so convenient for developers that they can take care of it by themselves?

Most software developers today know about the importance of testing. Many even use testing as a core part of their development workflow, as in test-driven development.

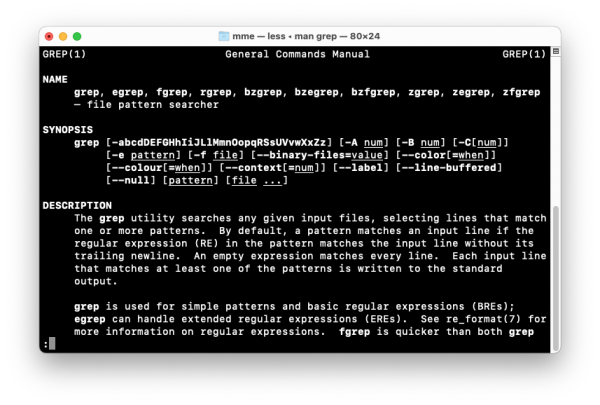

But developers prefer the kinds of tests that they can write, run, and maintain with their accustomed tools. Developers write tests in code: unit tests that run quickly and headlessly.

And developers usually only test the code they are working on and are responsible for. Rarely does it naturally fit into a developer's workflow to write integration tests between systems from different developers or teams, to say nothing of end-to-end tests.

Test-driven development also usually does not care about behavior on other hardware or operating systems, or on legacy hardware or software. "Works on my machine" is the mantra for the types of tests developers write to aid in their development.

If they are solely responsible for testing, developers will also tend to avoid writing code that is difficult to test with their preferred testing methods. Most importantly, this means they will write as little UI code as possible and avoid statefulness wherever they can.
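To make that preference concrete, here is a minimal sketch in Python. All names (`apply_discount`, `Cart`) are hypothetical and chosen only for illustration. It shows why stateless logic is attractive to a developer who writes their own tests: the pure function is checked in one line, while the stateful object needs setup before anything can be asserted, and real UI code would need far more than that.

```python
# Hypothetical example: the names below are illustrative assumptions,
# not taken from any real project or tool.

def apply_discount(price_cents, percent):
    """Pure function: the same inputs always produce the same output."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents - (price_cents * percent) // 100


class Cart:
    """Stateful object: the result depends on the history of calls."""

    def __init__(self):
        self.items = []
        self.discount_percent = 0

    def add(self, price_cents):
        self.items.append(price_cents)

    def total(self):
        return apply_discount(sum(self.items), self.discount_percent)


def test_apply_discount():
    # No setup needed: call the function, check the result.
    assert apply_discount(1000, 25) == 750


def test_cart_total():
    # The state has to be built up step by step before it can be checked.
    cart = Cart()
    cart.add(1000)
    cart.add(500)
    cart.discount_percent = 10
    assert cart.total() == 1350


if __name__ == "__main__":
    test_apply_discount()
    test_cart_total()
    print("all tests passed")
```

Both tests run headlessly in milliseconds, which is exactly what makes them comfortable for developers. Neither says anything about whether a user can actually find and operate the discount field in the finished application.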

The end result is software like a command line interface: technically correct, but hard to use and understand.

Can we meet in the middle?

What we have just explored are not so much two solutions as two software quality nightmare scenarios. What we actually should do is try to combine the best qualities of both approaches.

We need two things for this: communication and respect between testers and developers.

The problems between testers and developers are not technical; they are organizational. And so they need an organizational solution.

Companies should foster a positive relationship between testers and developers. QA and Dev teams should be encouraged to work together and walk a mile in each other's shoes. Developers must be made aware of the tools and workflows QA uses for their work. Testers should gain at least a cursory understanding of the way the software is built. And companies should be structured so that teams are not encouraged to compete with each other, and should communicate that everyone is working towards a common goal.

I know this is much easier said than done. But the divide between testers and developers is not as big as it may seem at times. If each is free to bring their skills and their focus to the table, together, we can build great software.
